57 Tokens Per Second. 200 Billion Parameters. Zero Limits.

DahReply’s high-capacity AI infrastructure is built for next-level fine-tuning, delivering unmatched processing speed and model scalability.

LLM Fine Tuning Service

With DahReply’s LLM fine-tuning, you can take a generic large language model (LLM) and refine it using your proprietary data, industry knowledge, and workflows, making it more accurate, efficient, and relevant to your needs.

Get responses trained on your business knowledge, reducing irrelevant answers and AI hallucinations by up to 50%.

We train LLMs for industry-specific tasks, such as legal document processing, medical diagnostics, and financial forecasting—giving you AI that speaks your language.

All fine-tuned models are deployed securely in our in-house self-hosted or private cloud environment, maintaining full compliance and data protection standards.

Fine-tuning builds on pre-trained models, meaning lower training costs, faster deployment, and higher efficiency compared to developing an AI model from scratch.

Inaccurate responses that lead to misinformation

Limited customisation

No industry adaptation

Shared cloud-based deployment, which increases data exposure

Requires constant manual input, which slows down decision-making and wastes resources

Highly accurate responses, context-aware

Fully trained on your data

Understands industry jargon and needs

Private and secure deployment

Automates workflows, reducing costs

More Accurate. More Relevant. More Powerful.

Only with DahReply.

Fine-tuning starts with high-quality, domain-specific labeled data. We help you prepare, structure, and optimise datasets to ensure your AI model learns from the right information—improving accuracy and reducing errors.
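As a rough illustration of what preparing and structuring a dataset can involve, here is a minimal Python sketch. It assumes labeled examples arrive as prompt/response pairs and are written out as JSON Lines; the field names `prompt` and `response` and the helpers `prepare_examples` and `to_jsonl` are hypothetical and do not describe DahReply’s actual pipeline.

```python
import json


def prepare_examples(raw_examples):
    """Normalise whitespace, drop incomplete rows, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for row in raw_examples:
        # Collapse runs of whitespace; treat missing or None fields as empty.
        prompt = " ".join(str(row.get("prompt") or "").split())
        response = " ".join(str(row.get("response") or "").split())
        if not prompt or not response:
            continue  # skip rows missing either side of the pair
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append({"prompt": prompt, "response": response})
    return cleaned


def to_jsonl(examples):
    """Serialise examples as JSON Lines, one training record per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


# Hypothetical sample data for illustration only.
raw = [
    {"prompt": "  What is the notice period? ", "response": "30 days."},
    {"prompt": "What is the notice period?", "response": "30 days."},
    {"prompt": "Summarise clause 4", "response": ""},
]
cleaned = prepare_examples(raw)
print(to_jsonl(cleaned))
```

In this sample, the duplicate and the row with an empty response are dropped, leaving a single clean record for training.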

Not all AI models are the same. We guide you in choosing the best model—whether a custom-built LLM or a pre-trained foundation—so that fine-tuning focuses on the tasks that matter most to your business, like text generation, classification, or document analysis.

AI fine-tuning isn’t just about training; it’s about optimising for performance. We tune key hyperparameters such as learning rate, batch size, and number of training epochs so that your AI model is accurate, efficient, and aligned with real-world business applications.
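To make the idea of hyperparameter optimisation concrete, here is a minimal Python sketch of a grid search over learning rate, batch size, and training epochs. The names `TrainingConfig`, `build_grid`, and `pick_best` are hypothetical, and `toy_validation_loss` is only a placeholder for a real train-and-evaluate run; none of this is taken from DahReply’s tooling.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class TrainingConfig:
    learning_rate: float
    batch_size: int
    epochs: int


def build_grid(learning_rates, batch_sizes, epoch_counts):
    """Enumerate every combination of the candidate hyperparameter values."""
    return [
        TrainingConfig(lr, bs, ep)
        for lr, bs, ep in product(learning_rates, batch_sizes, epoch_counts)
    ]


def pick_best(configs, validation_loss):
    """Return the configuration with the lowest validation loss."""
    return min(configs, key=validation_loss)


def toy_validation_loss(cfg):
    # Placeholder only: a real search would fine-tune the model with cfg
    # and measure loss on a held-out validation set.
    return (
        abs(cfg.learning_rate - 2e-5) * 1e4
        + abs(cfg.batch_size - 16) / 16
        + abs(cfg.epochs - 3)
    )


grid = build_grid([1e-5, 2e-5, 5e-5], [8, 16], [2, 3])
best = pick_best(grid, toy_validation_loss)
print(len(grid), best)
```

A full grid grows quickly as more values are added, so in practice a random or adaptive search over the same ranges may be a better fit for larger models.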

Your data stays yours. We deploy fine-tuned models in a self-hosted or private cloud environment, ensuring full security, compliance, and low-latency performance—so AI delivers insights in real time without risk.

Have questions? Don’t worry—we’re here to guide you toward a solution that fits your needs perfectly.

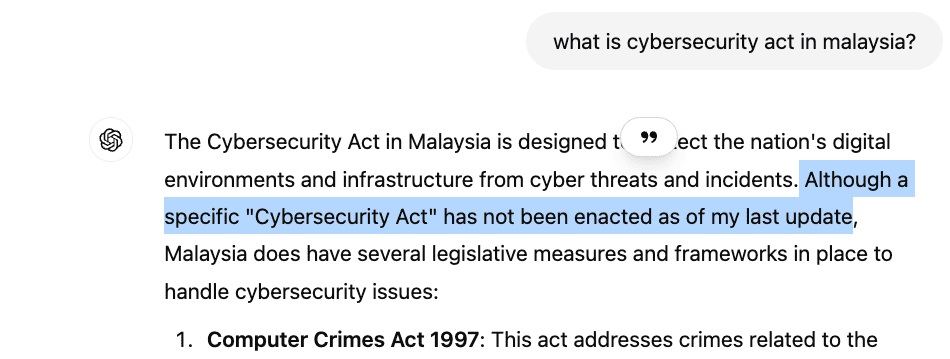

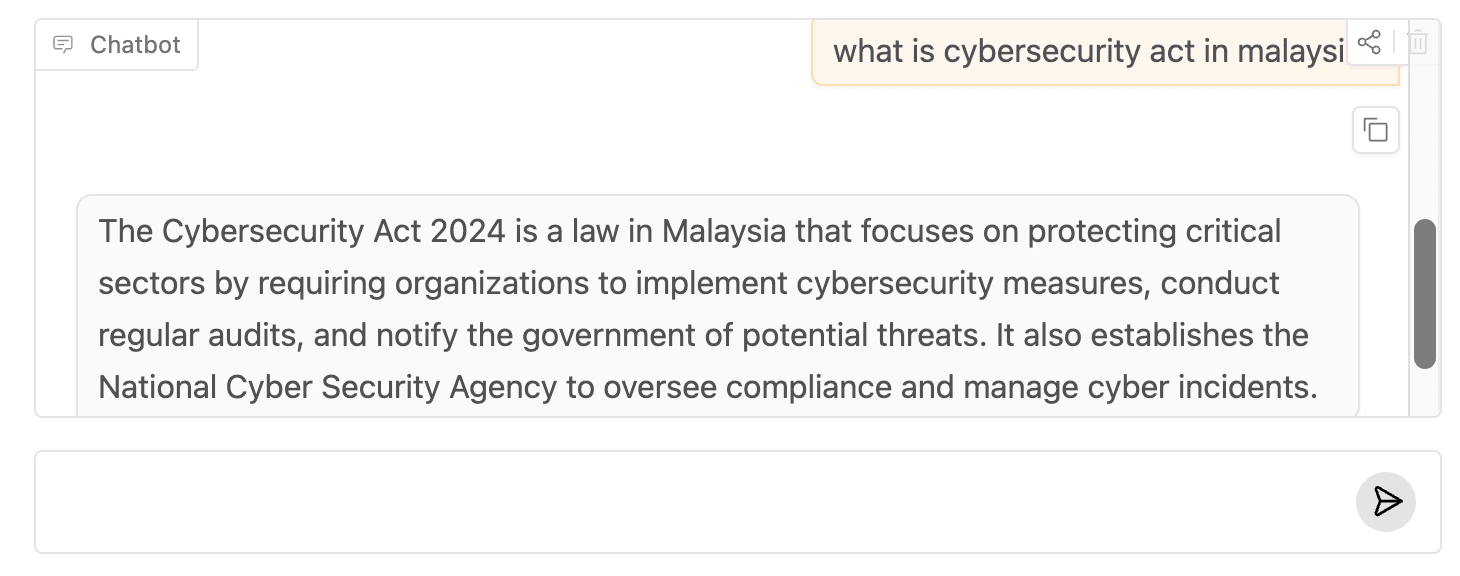

LLM Fine-Tuning is the process of custom-training an existing Large Language Model (LLM) using your business-specific data to improve its accuracy, relevance, and performance. This allows AI to generate more precise, context-aware responses tailored to your industry.

If your AI model produces generic, inaccurate, or irrelevant responses, struggles with industry-specific language, or requires better compliance and control, fine-tuning will optimise it to deliver precise, high-quality interactions that align with your business needs.

Yes! Your AI continuously learns from new interactions, feedback, and additional data, making it smarter and more efficient over time.

DahReply fine-tunes AI using enterprise-grade infrastructure with NVIDIA RTX A6000 GPUs and 1024GB DDR5 memory, allowing for faster and more scalable model training. AI models are customised with your business data to improve accuracy and performance. Security and compliance are prioritised, with options for self-hosted or private cloud deployment. Fine-tuned models are optimised for efficiency, reducing training costs, improving response times, and delivering more relevant AI-driven interactions.

Contact us today to learn more about how our AI solutions can help your business grow and succeed.